Intelligent Agents for Data Analysis: How Autonomous AI Turns Information Chaos Into Competitive Advantage

You know the feeling.

It’s 11 PM on a Tuesday. Your laptop screen glows with seventeen open tabs—dashboards, spreadsheets, half-finished SQL queries. Somewhere in the 2.3 million rows of customer data sitting in your database, there’s an insight that could change next quarter’s strategy. You can feel it. But finding it? That would take your team three weeks of manual work you don’t have.

Meanwhile, your competitor just announced a product pivot that perfectly anticipated a market shift you only noticed last month.

They saw something you didn’t. They moved faster than should have been possible.

How?

The answer isn’t that they hired better analysts. It isn’t that they have more data. It’s that they stopped trying to analyze data themselves—and deployed intelligent agents to do the cognitive heavy lifting instead.

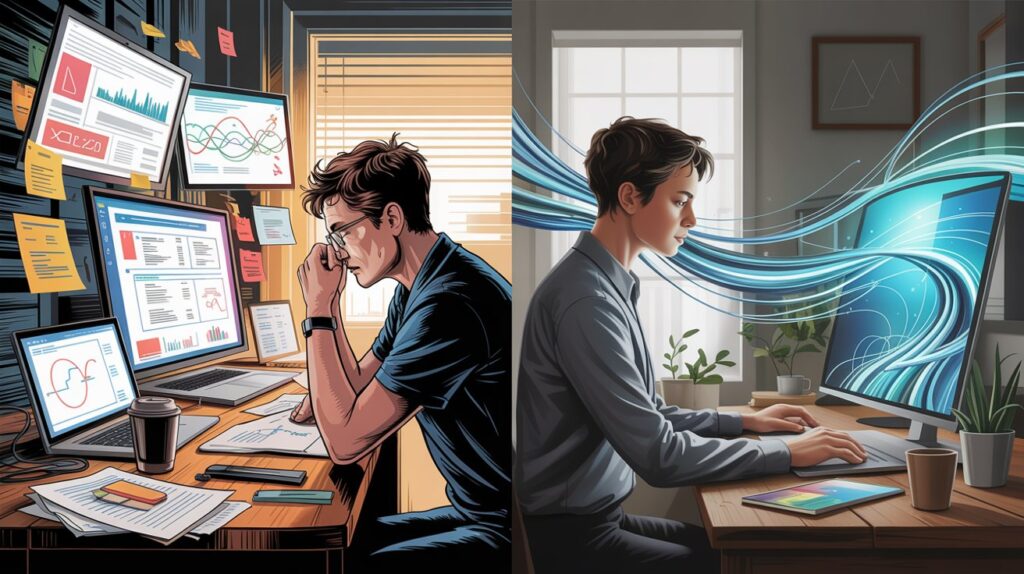

This is the new divide in data-driven organizations. Not between those with data and those without. Between those still drowning in information, and those who’ve outsourced the drowning to AI systems that never sleep, never tire, and never miss patterns hiding in plain sight.

The Data Deluge Problem Nobody Talks About Honestly

Here’s the uncomfortable truth the analytics industry doesn’t advertise: more data has made most organizations slower, not faster.

By one widely cited estimate, the world now generates roughly 2.5 quintillion bytes of data every day. That’s a number so large it’s essentially meaningless. What is meaningful is this: according to Gartner research, 87% of organizations have low business intelligence and analytics maturity. They’re collecting data at unprecedented scale while extracting insight at roughly the same rate as a decade ago.

The bottleneck isn’t storage. It isn’t computing power. It’s cognitive bandwidth.

Your data team—no matter how talented—has the same 24 hours as everyone else. Their working memory has limits. Fatigue sets in. Meetings interrupt. And unconscious biases shape what they pursue and what they overlook.

And every day, the gap widens between what your data contains and what your organization actually knows.

Picture your most experienced analyst sitting at their desk. They’ve spent eight hours building a report that executives will spend twelve minutes reading. Tomorrow, they’ll do it again. And again. While the most valuable patterns—the non-obvious correlations, the early warning signals, the opportunities hiding in customer behavior data—remain buried. Not because anyone’s incompetent. Because human attention is a finite resource being asked to process infinite information.

This is the trap. More dashboards don’t solve it. More hires barely dent it. The traditional analytics paradigm—where humans interrogate data through queries and tools—has hit a fundamental scaling wall.

Why Traditional Analytics Tools Keep Failing You

Let’s be direct about what business intelligence software actually does.

Traditional BI tools are reactive. They answer questions you already know to ask. They visualize data you’ve already decided is important. They’re sophisticated mirrors, reflecting back exactly what you point them at—and nothing more.

This is why organizations keep investing in new analytics platforms while insight velocity stays flat. The tool isn’t the bottleneck. The interrogation model is the bottleneck.

Consider the workflow: Human identifies potential question → Human writes query or configures dashboard → Tool retrieves and displays data → Human interprets results → Human decides next question → Repeat.

Every step requires human cognitive effort. Every step introduces delay. And critically, every step is limited by what the human already suspects might be true.

The insights that change businesses aren’t usually found through confirming existing hypotheses. They’re found by accident—by stumbling across anomalies nobody was looking for. But you can’t stumble across what you’re not examining. And you can’t examine 2.3 million rows manually.

This is why your competitor seemed to predict that market shift. They didn’t predict it. They had a system continuously examining patterns across their entire data ecosystem, 24/7, surfacing statistical anomalies no human ever thought to query for.

They had intelligent agents.

What Intelligent Agents Actually Do (The Cognitive Delegation Architecture)

Intelligent agents for data analysis represent a fundamental paradigm shift—from tools you operate to systems that operate on your behalf.

The distinction matters enormously. A tool waits for instructions. An agent takes initiative.

Here’s how the architecture works:

An intelligent data analysis agent combines three capabilities that, together, create something qualitatively different from any dashboard or BI platform:

Autonomous Exploration: The agent continuously scans your data environment without explicit prompting. It’s not waiting for queries. It’s actively searching for statistical anomalies, emerging trends, broken correlations, and patterns that deviate from historical baselines. Think of it as a tireless analyst who never stops asking, “What’s different? What changed? What doesn’t fit?”

Contextual Reasoning: Unlike simple alerting systems that flag any deviation, intelligent agents apply contextual judgment. They understand that a 5% revenue dip on a holiday weekend isn’t alarming—but the same dip on a normal Tuesday demands attention. They learn your business rhythms and distinguish signal from noise. This is where machine learning meets domain understanding, allowing agents to prioritize what actually matters.

Synthesized Communication: The agent doesn’t dump raw data on your desk. It translates findings into natural language insights, explains why something matters, and often suggests next steps. The output isn’t a spreadsheet. It’s a briefing.
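To make the three capabilities concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a description of how any particular product works: the function names, the z-score threshold, and the revenue figures are all made up for this example. It compares each day only against past days of the same kind (holiday or not), which is one simple way to express the holiday-weekend judgment described above.

```python
from statistics import mean, stdev

def find_anomaly(history, today, threshold=3.0):
    """Contextual check: compare today only with past days of the same kind."""
    peers = [d["revenue"] for d in history if d["holiday"] == today["holiday"]]
    if len(peers) < 2:
        return None  # too little context to judge
    baseline, spread = mean(peers), stdev(peers)
    if spread == 0:
        return None
    z = (today["revenue"] - baseline) / spread
    if abs(z) < threshold:
        return None  # within normal variation for this kind of day
    change_pct = 100 * (today["revenue"] - baseline) / baseline
    return {"z": z, "change_pct": change_pct}

def brief(finding, today):
    """Synthesized communication: turn the numbers into one plain sentence."""
    direction = "below" if finding["change_pct"] < 0 else "above"
    kind = "holiday" if today["holiday"] else "normal-day"
    return (f"Revenue on {today['date']} was {abs(finding['change_pct']):.1f}% "
            f"{direction} the {kind} baseline ({finding['z']:+.1f} standard deviations).")

# Made-up history (assumption): revenue in thousands, tagged by day type.
history = (
    [{"revenue": r, "holiday": False} for r in (100, 102, 98, 101, 99)]
    + [{"revenue": r, "holiday": True} for r in (94, 96, 95)]
)
tuesday = {"date": "2024-03-05", "revenue": 95, "holiday": False}
holiday = {"date": "2024-12-25", "revenue": 95, "holiday": True}

# Autonomous exploration: scan every new day without being asked.
for day in (tuesday, holiday):
    finding = find_anomaly(history, day)
    if finding:
        print(brief(finding, day))
```

With this sample data, the same revenue figure is flagged on the normal Tuesday and ignored on the holiday, because each day is judged against its own baseline. A real agent would use far richer context than a single holiday flag.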

The Judgment Layer

What makes this “cognitive delegation” rather than simple automation is the judgment layer. Traditional automation follows predetermined rules. Intelligent agents make contextual decisions about what’s worth investigating, how deeply to analyze it, and whether findings merit human attention.

The result? You’re no longer drowning in data or dashboards. You’re receiving curated intelligence from a system that understands your priorities and hunts for what you’d want to know—even when you didn’t know to ask.

According to MIT Sloan Management Review, organizations that implement autonomous analytical systems report 40% faster time-to-insight and a 60% reduction in “data grunt work” for human analysts.

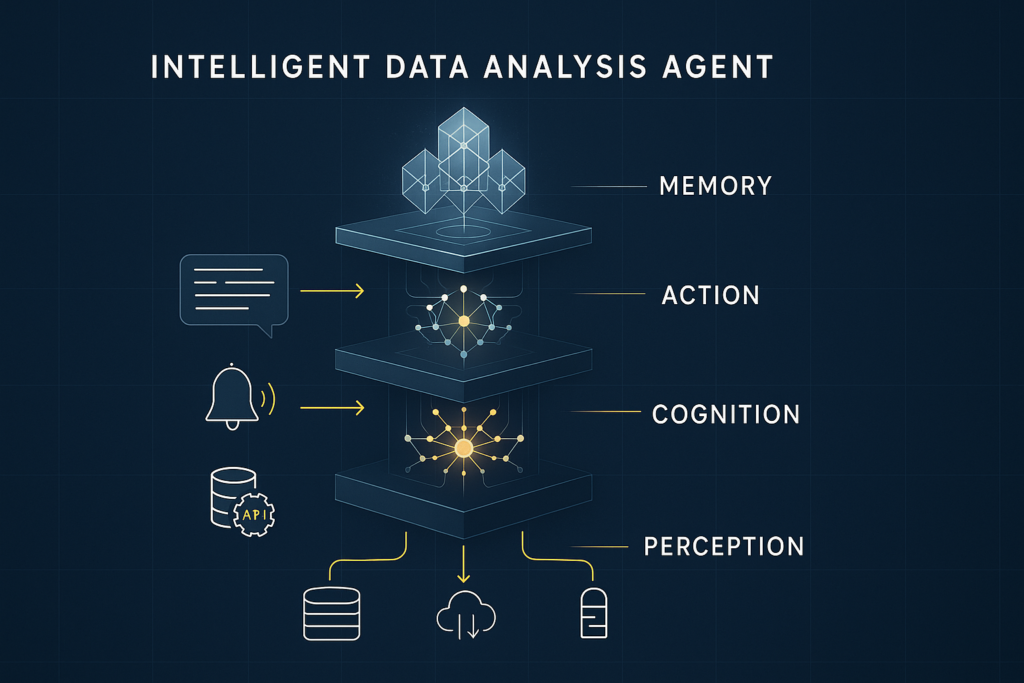

The Anatomy of an Intelligent Data Analysis Agent

Understanding the components helps demystify how these systems actually function.

Perception Layer

The agent must first see your data. This involves connectors to databases, APIs to SaaS platforms, integrations with data warehouses, and real-time streaming capabilities. Modern agents are designed for multi-source integration—pulling from your CRM, financial systems, web analytics, IoT sensors, and internal applications simultaneously.

The perception layer also handles data quality assessment. The agent notices when data feeds go stale, when values fall outside expected ranges, or when schemas change unexpectedly.
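As a rough illustration of those three quality checks (stale feeds, out-of-range values, schema changes), consider the sketch below. The `check_feed` function, the `loaded_at` column, and the 24-hour freshness window are assumptions chosen for the example, not part of any specific platform.

```python
from datetime import datetime, timedelta

def check_feed(rows, expected_columns, ranges, now, max_age=timedelta(hours=24)):
    """Return a list of human-readable data quality issues for one feed."""
    if not rows:
        return ["feed is empty"]
    issues = []

    # Staleness: how long since the newest row arrived?
    newest = max(r["loaded_at"] for r in rows)
    if now - newest > max_age:
        issues.append(f"stale feed: newest row loaded at {newest:%Y-%m-%d %H:%M}")

    # Schema drift: columns that disappeared or appeared.
    seen = set().union(*(r.keys() for r in rows))
    missing, extra = expected_columns - seen, seen - expected_columns
    if missing or extra:
        issues.append(f"schema change: missing={sorted(missing)}, unexpected={sorted(extra)}")

    # Range checks: count values outside the expected bounds.
    for column, (low, high) in ranges.items():
        bad = sum(1 for r in rows if column in r and not low <= r[column] <= high)
        if bad:
            issues.append(f"{bad} value(s) of '{column}' outside [{low}, {high}]")
    return issues

# Made-up sample feed (assumption): two rows loaded two days ago.
rows = [
    {"loaded_at": datetime(2024, 3, 3, 9, 0), "amount": 120.0, "region": "EU"},
    {"loaded_at": datetime(2024, 3, 3, 10, 0), "amount": -5.0, "region": "US"},
]
issues = check_feed(
    rows,
    expected_columns={"loaded_at", "amount", "customer_id"},
    ranges={"amount": (0, 10_000)},
    now=datetime(2024, 3, 5, 12, 0),
)
for issue in issues:
    print(issue)
```

Returning plain-language issues rather than raising errors keeps the check usable as an input to the agent’s own reporting.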

Cognition Layer

This is where the intelligence lives. The cognition layer combines statistical analysis engines for identifying anomalies and trends, machine learning models for pattern recognition and prediction, natural language processing for understanding queries and generating explanations, and knowledge graphs for understanding relationships between entities.

The cognition layer doesn’t operate on fixed rules. It learns what “normal” looks like for your specific business, adapts to seasonal patterns, and continuously refines its understanding of what constitutes a significant finding.
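One simple way to picture “learning what normal looks like” is a running baseline kept separately for each season key, such as day of week. The sketch below uses Welford’s online algorithm for the running mean and variance. The `SeasonalBaseline` class and the sample order counts are hypothetical, and production systems would typically rely on more capable models.

```python
from collections import defaultdict
from math import sqrt

class SeasonalBaseline:
    """Running mean and variance per season key, updated one value at a time."""

    def __init__(self):
        # Per key: [count, mean, sum of squared deviations (M2)]
        self.stats = defaultdict(lambda: [0, 0.0, 0.0])

    def update(self, key, value):
        s = self.stats[key]
        s[0] += 1
        delta = value - s[1]
        s[1] += delta / s[0]
        s[2] += delta * (value - s[1])

    def zscore(self, key, value):
        """How unusual is this value for this key? None if there is too little history."""
        n, mean, m2 = self.stats.get(key, (0, 0.0, 0.0))
        if n < 2:
            return None
        std = sqrt(m2 / (n - 1))
        return (value - mean) / std if std else None

baseline = SeasonalBaseline()
# Made-up daily order counts (assumption): Saturdays are normally busier.
for orders in (200, 210, 190):
    baseline.update("Sat", orders)
for orders in (100, 105, 95):
    baseline.update("Tue", orders)

print(baseline.zscore("Sat", 200))  # typical for a Saturday
print(baseline.zscore("Tue", 200))  # far outside the Tuesday baseline
```

Because every new observation updates the baseline, the definition of “normal” drifts with the business instead of being fixed in a rule.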

Action Layer

Intelligence without communication is worthless. The action layer determines how the agent delivers insights: prioritizing alerts by potential business impact, generating natural language summaries accessible to non-technical stakeholders, creating visualizations that highlight exactly what changed, triggering automated workflows when specific conditions are met, and integrating with collaboration tools where decisions actually happen.
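Prioritizing by business impact can be sketched as a scoring and routing step. Everything specific here is an assumption made for illustration: the `impact_usd` and `confidence` fields, the dollar thresholds, and the channel names.

```python
def prioritize(findings, page_above=50_000, digest_above=5_000):
    """Rank findings by expected impact and pick a delivery channel for each."""
    def expected_impact(f):
        return f["impact_usd"] * f["confidence"]

    plan = []
    for f in sorted(findings, key=expected_impact, reverse=True):
        score = expected_impact(f)
        if score >= page_above:
            channel = "page-on-call"
        elif score >= digest_above:
            channel = "daily-digest"
        else:
            channel = "log-only"
        plan.append((channel, f["summary"], round(score)))
    return plan

# Made-up findings (assumption), each with an estimated impact and confidence.
findings = [
    {"summary": "Checkout errors up in one region", "impact_usd": 80_000, "confidence": 0.9},
    {"summary": "Newsletter open rate dipped", "impact_usd": 3_000, "confidence": 0.5},
    {"summary": "Supplier lead times creeping up", "impact_usd": 40_000, "confidence": 0.4},
]
plan = prioritize(findings)
for channel, summary, score in plan:
    print(f"[{channel}] {summary} (expected impact ~${score:,})")
```

Weighting impact by confidence is one reasonable design choice among several. It keeps a large but speculative finding from crowding out a smaller, well-supported one.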

Memory Layer

Unlike one-off queries, intelligent agents maintain persistent memory. They remember what they’ve found before, track how situations evolved, and understand the narrative arc of your data over time. This allows them to make statements like “This pattern is similar to what preceded the Q3 customer churn spike” rather than treating each analysis as isolated.
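A statement like that implies the agent can compare a current pattern with stored past episodes. A minimal sketch, assuming each episode is summarized as a short vector of metric changes and compared by cosine similarity, might look like this. The `FindingMemory` class, the three metrics, and the numbers are hypothetical.

```python
from math import sqrt

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

class FindingMemory:
    """Stores past episodes as (label, signature) pairs and recalls the closest one."""

    def __init__(self):
        self.episodes = []

    def remember(self, label, signature):
        self.episodes.append((label, signature))

    def most_similar(self, signature, min_similarity=0.9):
        scored = [(cosine(signature, past), label) for label, past in self.episodes]
        best = max(scored, default=None)
        if best is None or best[0] < min_similarity:
            return None  # nothing in memory resembles the current pattern
        return best[1], best[0]

memory = FindingMemory()
# Signature (assumption): relative change in [support tickets, logins, plan downgrades].
memory.remember("weeks before the Q3 customer churn spike", [0.30, -0.20, 0.15])
memory.remember("holiday traffic surge", [-0.05, 0.40, 0.00])

match = memory.most_similar([0.28, -0.18, 0.12])
if match:
    label, similarity = match
    print(f"This pattern is similar to the {label} (similarity {similarity:.2f}).")
```

The similarity threshold matters: set too low, the agent sees echoes of the past everywhere; set too high, it never recognizes a recurring situation.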

Real-World Applications: Where Agents Create Unfair Advantage

Theory matters less than results. Here’s where intelligent agents deliver concrete competitive advantage:

Financial Services: Risk That Sees Around Corners

A regional bank deploys intelligent agents across transaction data, market feeds, and customer behavior patterns. The agent notices a subtle correlation: customers who reduce direct deposit amounts by more than 15% show 340% higher probability of closing accounts within 90 days—but only when combined with decreased mobile app usage.

No human analyst queried for this specific combination. The agent found it through autonomous exploration.
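The arithmetic behind a claim like “340% higher probability” is a comparison of churn rates between a segment and everyone else. The sketch below uses synthetic data constructed so the numbers come out the same way. It illustrates the calculation only and is not the bank’s data or method; the field names are assumptions.

```python
def churn_rate(customers):
    """Share of customers who closed their account."""
    return sum(c["churned"] for c in customers) / len(customers)

def in_segment(c):
    """Direct deposit down by more than 15% AND mobile app usage down."""
    return c["deposit_change"] < -0.15 and c["app_usage_change"] < 0

# Synthetic customers (assumption): 50 match both signals, 100 match only the first.
customers = (
    [{"deposit_change": -0.20, "app_usage_change": -0.10, "churned": i < 11} for i in range(50)]
    + [{"deposit_change": -0.20, "app_usage_change": 0.05, "churned": i < 5} for i in range(100)]
)

segment = [c for c in customers if in_segment(c)]
others = [c for c in customers if not in_segment(c)]

segment_rate = churn_rate(segment)  # 11 of 50
base_rate = churn_rate(others)      # 5 of 100
relative_increase = 100 * (segment_rate - base_rate) / base_rate

print(f"Segment churn {segment_rate:.0%} vs {base_rate:.0%}: {relative_increase:.0f}% higher")
```

Note that “340% higher” means the segment churns at 4.4 times the comparison rate, not 3.4 times. An agent exploring autonomously would run this kind of comparison across many signal combinations, which is also why it needs safeguards against chance findings.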

The bank now intervenes with high-value customers before they consider leaving. Retention rates improve by 23%. Competitors, still relying on lagging indicators, keep losing customers they never saw heading for the door.

E-Commerce: Demand Signals in the Noise

An online retailer’s agent monitors not just sales data, but returns, customer service tickets, social mentions, and search behavior. It surfaces an early warning: a specific product category is seeing 40% higher return rates in certain geographic regions, correlated with above-average temperatures.

The insight: the product packaging fails in heat. The agent caught this within two weeks of the pattern emerging. Traditional quarterly analysis would have found it three months later—after significant revenue loss and customer frustration.

Healthcare: Clinical Intelligence at Scale

A hospital network deploys agents across electronic health records, lab results, and operational data. The agent identifies that patients admitted on Fridays with specific diagnostic codes have an 18% longer length-of-stay than comparable patients admitted Monday through Thursday. It is a staffing and discharge planning pattern that stays invisible in aggregate statistics.

The finding drives targeted process improvements. Length-of-stay decreases. Bed capacity increases. Costs drop. All from a pattern buried in millions of records that no human had bandwidth to discover.

How to Deploy Intelligent Agents Without a Data Science Team

Here’s where many organizations assume they’re excluded from this capability. They think intelligent agents require dedicated AI teams, massive infrastructure investment, and months of custom development.

That assumption is increasingly outdated.

The agent landscape has matured significantly. Today’s options include:

Platform-Based Agents

Solutions like Microsoft’s Copilot for analytics, Google’s Looker AI features, and specialized platforms such as Dataiku offer agent-like capabilities within existing enterprise environments. These require minimal custom development—you’re deploying pre-built intelligence frameworks that learn your specific data.

Custom Agent Frameworks

For organizations with specific needs, frameworks like LangChain and AutoGen allow development teams to build tailored agents without starting from scratch. The heavy lifting of the cognitive architecture is handled; you’re configuring for your use case rather than inventing new AI.

Managed Services

A growing category of data services companies will deploy and manage intelligent agents on your behalf—providing the capability without requiring internal expertise.

The practical deployment path for most organizations:

1. Identify a bounded domain: Don’t try to agent-ify your entire data estate at once. Pick a specific business area with clear data sources and measurable outcomes—customer churn, inventory optimization, financial close anomalies.

2. Establish baselines: Before deploying the agent, document current time-to-insight, analyst hours spent, and insight quality for that domain. You need comparison points.

3. Deploy in shadow mode: Let the agent analyze in parallel with existing processes. Compare its findings to human-generated insights. This builds trust and surfaces calibration needs.

4. Iterate on feedback loops: The agent learns from feedback. When it surfaces irrelevant findings, you tell it. When it misses something important, you train on the gap. The system improves continuously.

5. Expand gradually: Once one domain demonstrates value, extend to adjacent areas. The same agent architecture often transfers across use cases with limited reconfiguration.
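The shadow-mode comparison in step 3 can be as simple as setting the agent’s findings beside the analysts’ for the same period. A minimal sketch follows; the finding identifiers are made up, and treating analyst findings as the reference set is an assumption that undercounts anything genuinely new the agent discovers.

```python
def shadow_report(agent_findings, analyst_findings):
    """Compare what the agent surfaced with what human analysts surfaced."""
    agent, analyst = set(agent_findings), set(analyst_findings)
    confirmed = agent & analyst
    return {
        "confirmed": sorted(confirmed),
        "agent_only": sorted(agent - analyst),       # new insight or noise: needs review
        "missed_by_agent": sorted(analyst - agent),  # calibration gaps to train on
        "precision": len(confirmed) / len(agent) if agent else 0.0,
        "recall": len(confirmed) / len(analyst) if analyst else 0.0,
    }

# Hypothetical finding IDs for one month of shadow mode.
report = shadow_report(
    agent_findings=["churn-deposit-drop", "returns-heat", "stale-inventory"],
    analyst_findings=["churn-deposit-drop", "returns-heat", "pricing-gap"],
)
for key, value in report.items():
    print(f"{key}: {value}")
```

The two difference lists feed step 4 directly: agent-only findings get a human verdict of relevant or irrelevant, and missed findings show where the agent needs recalibration.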

The Future Belongs to Those Who Delegate

There’s a moment in the transition—perhaps you’ve already felt it—when the anxiety inverts.

At first, delegating analytical thinking to an autonomous system feels like surrender. Like admitting you can’t keep up. Like losing control.

Then something shifts. The agent surfaces an insight at 3 AM that would have taken your team until next Friday to find. You walk into a Monday meeting knowing something your competitors won’t discover for weeks. You realize your analysts are spending time on strategic synthesis instead of data wrangling—because the wrangling is handled.

Picture yourself six months from now. The notification chimes softly on your phone during your morning routine. You glance at the screen. Your intelligent agent has identified an emerging pattern in customer behavior—a shift that correlates with a market trend nobody’s talking about yet. The insight is clear. The recommended action is specific. The supporting evidence is linked.

What You Didn’t Do

You didn’t:

- stay up late wrestling with spreadsheets

- wait for quarterly reports

- miss the signal in the noise

You delegated the cognitive labor. And now you’re seeing what others can’t.

This is the divide we’re living through. Between organizations still treating data analysis as a human-centric craft that scales with headcount, and those who’ve recognized that intelligence itself can be delegated—freeing human cognition for judgment, creativity, and decision-making that no agent can replicate.

The data deluge isn’t slowing down. Your cognitive bandwidth isn’t expanding. The question isn’t whether intelligent agents will transform data analysis—that transformation is already underway.

The question is whether you’ll be among those who weaponize it, or those still wondering how competitors move so fast.

The tools exist. The architecture is proven. The competitive advantage compounds with every day of deployment.

The chaos is waiting to become clarity.

The only question left is how much longer you’re willing to drown.

Conclusion: From Overwhelm to Unfair Advantage

The promise of intelligent agents for data analysis isn’t incremental improvement. It’s a fundamental restructuring of the relationship between humans and data.

The desire driving this shift, to escape complexity and gain competitive advantage, isn’t going away. If anything, as data volumes accelerate and market cycles compress, the pressure intensifies. Those still relying on human-powered, query-based analytics aren’t just falling behind. They’re fighting a battle with obsolete weapons.

The mechanism behind it, the cognitive delegation architecture, works because it removes the bottleneck. Not data storage. Not processing power. Attention. When intelligent agents handle the exhausting, time-consuming work of pattern detection and anomaly surfacing, human analysts transform from data wrestlers into strategic directors.

Your organization’s next breakthrough insight is almost certainly buried in data you already have. The question is whether a human will eventually stumble upon it—if they have time, if they happen to look in the right place, if they notice the pattern—or whether an intelligent agent will surface it tomorrow.

The choice is yours. But understand what you’re choosing between: continued drowning, or strategic clarity.

The agents are ready.

Are you?
